
What you need to know about conformity assessments under the EU AI Act

What are conformity assessments? Who's responsible for them, and why do they matter? Learn more about these assessments and how they relate to your AI governance program.

Lauren Diethelm

AI Content Marketing Specialist

November 20, 2023

The draft EU AI Act takes a precautionary, risk-based approach to AI, sorting AI systems into four risk categories. These categories are designed to protect the health, safety, and fundamental rights of people who come into contact with AI systems.

Systems designated as high-risk aren't banned, but they must meet additional requirements before they can be deployed to the public. One of those requirements is undergoing a conformity assessment (CA).

Download the guide, developed in partnership with the Future of Privacy Forum.

What is a conformity assessment?

Article 3 of the draft AI Act defines a conformity assessment as the process of verifying and/or demonstrating that a high-risk AI system complies with certain requirements laid out in the Act. These requirements are:

- Risk management system

- Data governance

- Technical documentation

- Record keeping

- Transparency and provision of information

- Human oversight

- Accuracy, robustness, and cybersecurity

Providers of high-risk AI systems must meet these requirements before their system can be placed on the market, which helps protect individuals from the potential harms a high-risk system poses. Because CAs are a key accountability tool for high-risk systems, understanding how and where they apply is equally important for your AI governance program.
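For teams tracking CA readiness internally, the seven requirements can be kept as a simple checklist. The sketch below is a hypothetical illustration only: the `Requirement` enum and the `outstanding_requirements` helper are names chosen for this example, not part of the Act or of any product, and an empty result means only that every item has been ticked off internally, not that the system would pass a conformity assessment.

```python
from enum import Enum


class Requirement(Enum):
    """The seven requirements for high-risk AI systems (labels are illustrative)."""
    RISK_MANAGEMENT = "Risk management system"
    DATA_GOVERNANCE = "Data governance"
    TECHNICAL_DOCUMENTATION = "Technical documentation"
    RECORD_KEEPING = "Record keeping"
    TRANSPARENCY = "Transparency and provision of information"
    HUMAN_OVERSIGHT = "Human oversight"
    ACCURACY_ROBUSTNESS_CYBERSECURITY = "Accuracy, robustness, and cybersecurity"


def outstanding_requirements(evidenced: set[Requirement]) -> list[Requirement]:
    """Return, in the order listed, the requirements with no supporting evidence yet.

    Hypothetical helper: it tracks internal progress only and does not
    assess whether the evidence would satisfy the Act.
    """
    return [req for req in Requirement if req not in evidenced]


if __name__ == "__main__":
    done = {Requirement.RISK_MANAGEMENT, Requirement.DATA_GOVERNANCE}
    for req in outstanding_requirements(done):
        print("Outstanding:", req.value)
```

Iterating over the enum preserves the order of the list above, so the output reads the same way as the Act's requirements.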

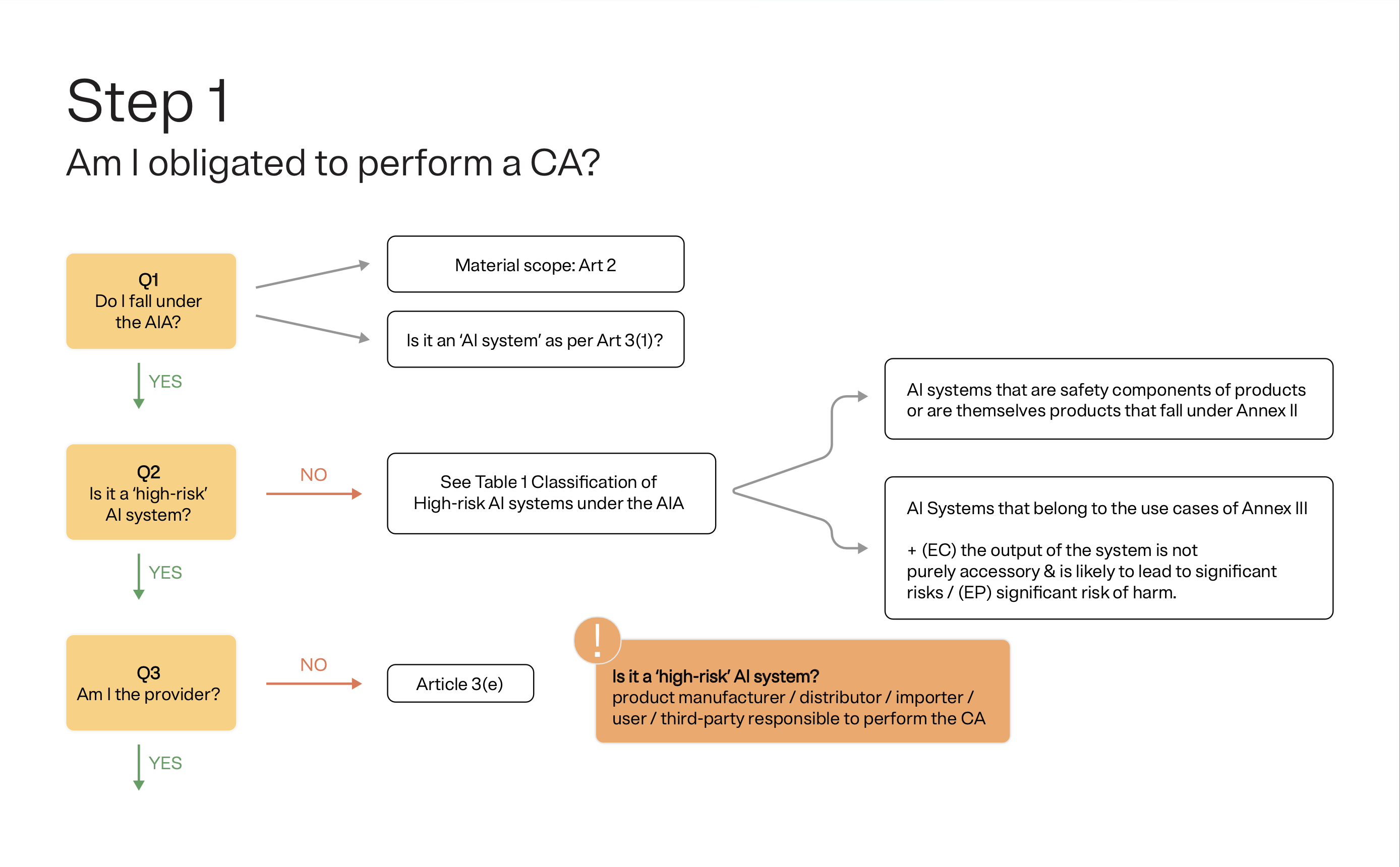

Do I need to conduct a conformity assessment?

Whether you need to perform a CA depends on the answers to several questions. Here's how to determine whether you actually need to conduct one:

First, do you fall under the jurisdiction of the AI Act? And is the system you're hoping to use actually an AI system as defined in Article 3(1) of the Act? If the answer to either question is no, you're done: you don't need to conduct a CA. The same applies if your system isn't classified as high-risk, since only systems categorized as high-risk under the EU AI Act must undergo a conformity assessment.

Download the full infographic: EU AIA Conformity Assessment: A step-by-step guide

The other determining factor is your role with respect to the system. If you're the system's provider or a responsible actor, you are responsible for conducting the CA. Usually the provider and the responsible actor are the same party, but in certain exceptional cases the responsible actor is someone else.

A responsible actor may be a distributor, importer, deployer, or other third party that puts an AI system into use under its own name or trademark. The exact legal requirements for whether, or when, someone other than the provider must perform the CA haven't yet been set.
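The questions above form a short decision sequence in which any "no" ends the analysis. The Python sketch below encodes that sequence under simplifying assumptions: each answer is a yes/no flag you have already settled through legal review (jurisdiction, the Article 3(1) definition, and high-risk classification cannot be computed), and the `Role`, `SystemProfile`, and `must_conduct_ca` names are hypothetical. Because the rules for when a party other than the provider must perform the CA haven't been set, treating every responsible actor as obliged is itself an assumption.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Role(Enum):
    PROVIDER = auto()
    # Assumption: a distributor, importer, deployer, or other third party
    # putting the system into use under its own name or trademark.
    RESPONSIBLE_ACTOR = auto()
    OTHER = auto()


@dataclass(frozen=True)
class SystemProfile:
    # Each flag records a conclusion reached elsewhere; nothing here evaluates the law.
    within_ai_act_jurisdiction: bool
    is_ai_system_under_art_3_1: bool
    is_high_risk: bool
    your_role: Role


def must_conduct_ca(profile: SystemProfile) -> bool:
    """Walk the questions in order and stop at the first 'no'."""
    if not profile.within_ai_act_jurisdiction:
        return False  # outside the Act's scope
    if not profile.is_ai_system_under_art_3_1:
        return False  # not an AI system as defined in Article 3(1)
    if not profile.is_high_risk:
        return False  # only high-risk systems require a CA
    # The CA falls to the provider or a responsible actor (simplified).
    return profile.your_role in (Role.PROVIDER, Role.RESPONSIBLE_ACTOR)


if __name__ == "__main__":
    profile = SystemProfile(True, True, True, Role.PROVIDER)
    print(must_conduct_ca(profile))
```

Ordering the checks this way mirrors the article: scope and definition first, then risk category, and only then your role.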

When should I do a conformity assessment?

Once you've determined that a CA is required, the question becomes when to conduct it. Conformity assessments are required at two points in a system's lifecycle:

- Ex ante (before the event): The CA must be conducted before the AI system is placed on the EU market, meaning before it is made available for public use.

- Ex post (after the event): After a high-risk system has been placed on the market, a new CA is required if the system undergoes substantial modifications. This doesn't mean a new CA is needed every time the system continues to learn (as models do) after being placed on the market; rather, it refers to changes that would significantly affect the system's compliance with the requirements assessed in the CA.
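The two triggers can be expressed as a small lookup. This is a hypothetical sketch: the `Event` values and the `requires_ca` helper are invented for illustration, and deciding whether a change is a "substantial modification" is a legal judgment that the code simply takes as given. Continued learning after launch is deliberately not a trigger, mirroring the point above.

```python
from enum import Enum, auto


class Event(Enum):
    """Hypothetical lifecycle events for a high-risk AI system."""
    PLACING_ON_EU_MARKET = auto()      # ex ante: the CA must be completed before this happens
    CONTINUED_LEARNING = auto()        # the model keeps learning after launch
    SUBSTANTIAL_MODIFICATION = auto()  # ex post: change that significantly affects compliance
    MINOR_CHANGE = auto()              # change with no significant effect on compliance


# Only these events call for a (new) conformity assessment.
CA_TRIGGERS = frozenset({Event.PLACING_ON_EU_MARKET, Event.SUBSTANTIAL_MODIFICATION})


def requires_ca(event: Event) -> bool:
    """Return True if the event calls for a (new) conformity assessment."""
    return event in CA_TRIGGERS


if __name__ == "__main__":
    for event in Event:
        status = "CA required" if requires_ca(event) else "no new CA"
        print(f"{event.name}: {status}")
```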

Who actually conducts conformity assessments?

Conformity assessments can be conducted in two ways: internally or through a third-party process. As the names suggest, internal CAs are conducted by the provider (or the responsible actor), while third-party CAs are conducted by an external "notified body."

Article 43 of the AI Act sets out which cases require an internal CA and which must go through a third-party process. You can also find more detailed steps for each process in the step-by-step guide.

Putting conformity with requirements for high-risk systems into practice

As mentioned earlier, CAs aim to verify that high-risk systems comply with all seven requirements laid out in the AI Act: risk management, data governance, technical documentation, record keeping, transparency obligations, human oversight, and accuracy, robustness, and cybersecurity.

Unless specified otherwise, all these requirements should be met before the AI system is put into use or enters the market. Once the system is in use, the provider must also ensure continuous compliance throughout the system’s lifecycle.

When evaluating these requirements, you need to take into account both the system's intended purpose and its reasonably foreseeable misuse.

Use the guide to dive deeper into each requirement and see how compliance and implementation play out for each one.
